WikiBrain’s busy thinking up its first public release. Please be patient while we fine-tune our APIs and complete our documentation. Ask us questions at the WikiBrain Google group!

The WikiBrain Java library enables researchers and developers to incorporate state-of-the-art Wikipedia-based algorithms and technologies in a few lines of code.

If you’d like to cite WikiBrain, please use: Sen, Shilad, Toby Jia-Jun Li, WikiBrain Team, and Brent Hecht. “WikiBrain: Democratizing computation on Wikipedia.” In Proceedings of The International Symposium on Open Collaboration, p. 27. ACM, 2014. [pdf]

WikiBrain is easy to use. Wikipedia data can be downloaded, parsed, and imported into a database by running a single command. WikiBrain allows you to incorporate state-of-the-art algorithms in your Java projects in just a few lines of code.

WikiBrain is multi-lingual. WikiBrain supports all 267 Wikipedia language editions, and builds a concept map that connects an article in one language to the same article in another language.
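One way to picture the concept map is as a two-way index: every language-specific article points to a shared concept id, and every concept id points back to its article in each language. The sketch below is only an illustration of that idea in plain Java. The class `ConceptMapSketch`, its methods, and the concept id are hypothetical and are not part of the WikiBrain API.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.Optional;

// Illustrative only: a toy concept map, not WikiBrain code.
class ConceptMapSketch {
    // concept id -> (language code -> article title)
    private final Map<Integer, Map<String, String>> titlesByConcept = new HashMap<>();
    // "language:title" -> concept id
    private final Map<String, Integer> conceptByArticle = new HashMap<>();

    /** Registers an article in one language as belonging to a concept. */
    void add(int conceptId, String lang, String title) {
        titlesByConcept.computeIfAbsent(conceptId, k -> new HashMap<>()).put(lang, title);
        conceptByArticle.put(lang + ":" + title, conceptId);
    }

    /** Finds the article about the same concept in another language, if any. */
    Optional<String> translate(String fromLang, String title, String toLang) {
        Integer conceptId = conceptByArticle.get(fromLang + ":" + title);
        if (conceptId == null) {
            return Optional.empty();
        }
        return Optional.ofNullable(titlesByConcept.get(conceptId).get(toLang));
    }

    public static void main(String[] args) {
        ConceptMapSketch map = new ConceptMapSketch();
        // The concept id 1 is arbitrary.
        map.add(1, "en", "Eiffel Tower");
        map.add(1, "fr", "Tour Eiffel");
        map.add(1, "de", "Eiffelturm");
        System.out.println(map.translate("en", "Eiffel Tower", "fr").orElse("(no match)"));
    }
}
```

A lookup for an article or language that was never registered returns an empty `Optional`, which mirrors the fact that not every article exists in every language edition.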

WikiBrain is fast. WikiBrain uses single-machine parallelization (i.e., multi-threading) for all computationally intensive features. While it imports data into standard SQL databases (H2 or Postgres), it builds optimized local caches for critical data.

WikiBrain integrates a variety of specific algorithms and datasets in one framework, including:

- Semantic-relatedness algorithms that measure the strength of association between two concepts such as “racecar” and “engine.”

- Geospatial algorithms for spatial Wikipedia pages like Minnesota and the Eiffel Tower.

- Wikidata: Support for structured Wikidata “facts” about articles.

- Pageviews: Public data about how often Wikipedia pages are viewed with hourly precision.
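To make the first item in the list above a little more concrete, here is a minimal sketch of one very simple relatedness measure: the Jaccard overlap between the sets of pages that two articles link to. It is a deliberately basic stand-in for illustration, not one of WikiBrain’s own metrics. The class `RelatednessSketch`, the `jaccard` method, and the link sets are all made up.

```java
import java.util.HashSet;
import java.util.Set;

// Illustrative only: a toy relatedness measure, not WikiBrain code.
class RelatednessSketch {

    /** Jaccard overlap: size of the intersection divided by size of the union, in [0, 1]. */
    static double jaccard(Set<String> a, Set<String> b) {
        if (a.isEmpty() && b.isEmpty()) {
            return 0.0; // no evidence either way
        }
        Set<String> intersection = new HashSet<>(a);
        intersection.retainAll(b);
        int unionSize = a.size() + b.size() - intersection.size();
        return (double) intersection.size() / unionSize;
    }

    public static void main(String[] args) {
        // Hypothetical outgoing links for three articles.
        Set<String> racecar = Set.of("Auto racing", "Fuel", "Piston", "Tire");
        Set<String> engine = Set.of("Cylinder", "Fuel", "Piston", "Tire");
        Set<String> banana = Set.of("Fruit", "Potassium");

        System.out.println("racecar ~ engine: " + jaccard(racecar, engine));
        System.out.println("racecar ~ banana: " + jaccard(racecar, banana));
    }
}
```

Articles that share many links score close to 1 and articles that share none score 0. Real semantic-relatedness algorithms draw on richer signals, but the input and output have the same shape: two concepts in, one strength-of-association score out.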

An example program

Once you have imported data, you can write programs that analyze Wikipedia. Here’s a simple example you can find in the Cookbook:

    // Prepare the environment
    Env env = EnvBuilder.envFromArgs(args);

    // Get the configurator, then use it to create a phrase analyzer and a page DAO
    Configurator configurator = env.getConfigurator();
    PhraseAnalyzer pa = configurator.get(PhraseAnalyzer.class, "anchortext");
    LocalPageDao pageDao = configurator.get(LocalPageDao.class);

    // Resolve the phrase "Apple" to at most 20 candidate pages in Simple English Wikipedia
    LinkedHashMap<LocalId, Float> resolution = pa.resolve(Language.SIMPLE, "Apple", 20);

    // Print each candidate page with its score
    System.out.println("resolution of apple");
    if (resolution == null) {
        System.out.println("\tno resolution !");
    } else {
        for (LocalId p : resolution.keySet()) {
            Title title = pageDao.getById(p).getTitle();
            System.out.println("\t" + title + ": " + resolution.get(p));
        }
    }

When you run this program, you’ll see output like this:

    resolution of apple
        LocalPage{nameSpace=ARTICLE, title=Apple, localId=39, language=Simple English}: 0.070175424
        LocalPage{nameSpace=ARTICLE, title=Apple juice, localId=19351, language=Simple English}: 0.043859642
        LocalPage{nameSpace=ARTICLE, title=Apple Macintosh, localId=517, language=Simple English}: 0.043859642
        LocalPage{nameSpace=ARTICLE, title=Apple Inc., localId=7111, language=Simple English}: 0.043859642
        LocalPage{nameSpace=ARTICLE, title=Apple A4, localId=251288, language=Simple English}: 0.043859642
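One plausible way to read these scores is as the share of links with a given anchor text that point at each page. The numbers above are at least consistent with that reading (0.0702 is about 8/114 and 0.0439 is about 5/114), but this is an inference from the output, not a description of how WikiBrain computes them. The sketch below shows the idea in plain Java; the class `AnchorTextSketch`, its `resolve` method, and the link counts are all hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Illustrative only: a toy anchor-text resolver, not WikiBrain code.
class AnchorTextSketch {

    /**
     * Converts raw counts (how often a phrase appears as link text pointing
     * at each page) into fractions of the total, ordered from the most to
     * the least frequent target and truncated to maxPages entries.
     */
    static LinkedHashMap<String, Double> resolve(Map<String, Integer> linkCounts, int maxPages) {
        LinkedHashMap<String, Double> scores = new LinkedHashMap<>();
        double total = 0;
        for (int count : linkCounts.values()) {
            total += count;
        }
        if (total == 0) {
            return scores; // the phrase never appears as link text
        }
        final double denominator = total;
        linkCounts.entrySet().stream()
                .sorted((a, b) -> Integer.compare(b.getValue(), a.getValue()))
                .limit(maxPages)
                .forEachOrdered(e -> scores.put(e.getKey(), e.getValue() / denominator));
        return scores;
    }

    public static void main(String[] args) {
        // Made-up counts for links whose text is "apple".
        Map<String, Integer> counts = new LinkedHashMap<>();
        counts.put("Apple Inc.", 3);
        counts.put("Apple", 6);
        counts.put("Apple juice", 1);

        for (Map.Entry<String, Double> e : resolve(counts, 2).entrySet()) {
            System.out.println("\t" + e.getKey() + ": " + e.getValue());
        }
    }
}
```

Because each score is divided by the total across all targets, not just the ones kept, limiting the result to the top pages does not change their scores.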

About

WikiBrain development is led by Shilad Sen at Macalester College and Brent Hecht at the University of Minnesota. WikiBrain has been generously supported by the National Science Foundation, Macalester College, the Howard Hughes Medical Institute, and the University of Minnesota. WikiBrain is licensed under the Apache License, version 2.

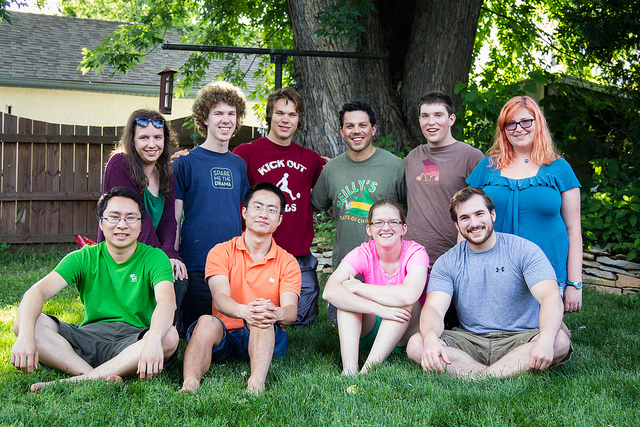

Macalester WikiBrain development team, Summer 2013

WikiBrain has been made possible through substantial contributions by many students, including: Alan Morales Blanco, Margaret Giesel, Rebecca Gold, Becca Harper, Ben Hillman, Sam Horlbeck, Aaron Jiang, Matthew Lesicko, Toby Li, Yulun Li, Huy Mai, Ben Mathers, Sam Naden, Jesse Russell, Laura Sousa Vonessen, Zixiao Wang, and Ari Weilland.